HMD UX testing – Introducing absolute tracking of XR content

The maturity of AR/VR/MR devices is improving significantly in 2019. To offer a seamless UX for consumers, more comprehensive testing methods are needed. OptoFidelity, the leading test solution provider for HMD UX testing, introduces a novel content tracking method for AR/VR/MR testing.

In 2019, we can expect a wealth of exciting virtual and augmented reality devices to arrive: for example, Oculus is going to release the standalone Quest headset, and Nreal raised $15M in funding to produce a sunglass-sized AR headset. At CES 2019, there were almost a hundred exhibitors in the AR/VR Gaming category.

The rapid development of new technology creates a need to verify performance during product development, as part of continuous integration. This post focuses on measuring head tracking accuracy, which comprises many measurable elements: drifting, jitter, motion-to-photon latency, cross-axis coupling… you name it!

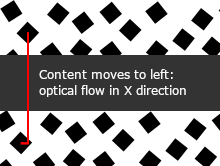

HMD UX testing got its kick-start in 2017, when OptoFidelity's first offering, BUDDY-1 (previously known as VR Multimeter), was launched. BUDDY-1 is a solution for benchmarking motion-to-photon latency with one degree of freedom. Our BUDDY testers are equipped with a smart camera which captures and analyzes the frames displayed on the headset. BUDDY-1 tracks the optical flow of a target pattern placed in the virtual world and compares it to the physical rotation angle over time, yielding the motion-to-photon time. BUDDY-1 is a good workhorse for basic motion-to-photon latency measurement, e.g. for catching fatal performance regressions.
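To make the idea of comparing two angle traces concrete, here is a minimal sketch of one way a motion-to-photon delay could be estimated. It is not OptoFidelity's algorithm. It assumes that both the physical rotation and the content motion have already been converted to angles sampled on a common, uniform time base, and the function name `estimate_latency` and its parameters are hypothetical.

```python
import math

def estimate_latency(robot_angle, content_angle, dt, max_lag):
    """Return the delay (in seconds) that best aligns content with robot motion.

    Tries every whole-sample lag from 0 to max_lag and keeps the one with the
    smallest mean squared difference between the shifted content trace and
    the robot trace. Assumes uniform sampling with period dt.
    """
    best_lag, best_err = 0, float("inf")
    for lag in range(max_lag + 1):
        n = len(robot_angle) - lag
        err = sum((content_angle[i + lag] - robot_angle[i]) ** 2
                  for i in range(n)) / n
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag * dt

# Synthetic example: a 0.5 Hz back-and-forth rotation sampled at 1 kHz,
# with the content trailing the robot by 20 samples.
dt = 0.001
robot = [math.sin(2 * math.pi * 0.5 * i * dt) for i in range(2000)]
content = [math.sin(2 * math.pi * 0.5 * (i - 20) * dt) for i in range(2000)]
latency = estimate_latency(robot, content, dt, max_lag=100)
```

The resolution of this brute-force search is limited to one sample period; a real measurement would also have to deal with noise and with the camera's own capture timing.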

Since the introduction of BUDDY-1, VR/AR headset technology has advanced a lot. We have also received a lot of feedback from our customers on how they would like to measure HMD UX and what they expect from HMD UX testing solutions. To respond to increasingly complex testing needs, we came up with BUDDY-3, which is designed to measure head tracking accuracy with three rotational degrees of freedom.

An illustration of the BUDDY-1 target texture and optical flow between consecutive frames.

For BUDDY-3, we invented a new kind of test content: a so-called marker constellation, which is a sphere covered with markers. In this test content, the virtual camera is at the center of the sphere – wherever the user looks, several of these markers are seen. This allows us to detect the complete three-dimensional orientation of the virtual content from a single frame shown on the headset display, with no dependence on previous frames. This method enables the measurement of drifting, jitter, motion-to-photon latency and other tracking inaccuracies on all three rotational axes – well beyond the capabilities of BUDDY-1 and its optical-flow-based measurement.

![]()

Left: illustration of the marker constellation from the outside. The virtual observer is in the center of the sphere. Right: constellation on the headset display, as seen by BUDDY-3’s camera.

The markers are circles with an embedded binary code which makes them identifiable. Their design is reminiscent of ShotCode markers, which are intended as a barcode replacement. The smart camera first detects the 2D coordinates of the marker center points and decodes the binary codes. Then, the 2D coordinates are matched to the original 3D marker coordinates. From these 2D-3D point pairs, it is possible to calculate the 3D orientation of the content and present it as yaw, pitch and roll angles in the results. This problem is mathematically akin to the Perspective-n-Point (PnP) problem, and thus similar methods apply here.
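As an illustration of how an orientation can be recovered from matched marker directions, the sketch below uses the classic TRIAD method, which needs only two identified markers. This is a swapped-in textbook technique, not necessarily what BUDDY-3 does; with several markers in view, a least-squares or PnP-style solver would be the more typical choice. The sketch assumes that the detected 2D marker centers have already been converted to viewing directions using the camera calibration, and the function names and the Z-Y-X (yaw, pitch, roll) angle convention are assumptions made for this example.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def triad(ref1, ref2, obs1, obs2):
    """Rotation matrix R such that obs is approximately R applied to ref.

    ref1, ref2: known 3D directions of two markers on the sphere.
    obs1, obs2: directions in which the camera sees those same markers.
    """
    t1 = normalize(ref1)
    t2 = normalize(cross(ref1, ref2))
    t3 = cross(t1, t2)
    s1 = normalize(obs1)
    s2 = normalize(cross(obs1, obs2))
    s3 = cross(s1, s2)
    pairs = ((s1, t1), (s2, t2), (s3, t3))
    # R = sum over i of the outer product s_i * t_i^T
    return [[sum(s[i] * t[j] for s, t in pairs) for j in range(3)]
            for i in range(3)]

def yaw_pitch_roll(R):
    """Angles in degrees, assuming R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
    yaw = math.atan2(R[1][0], R[0][0])
    pitch = -math.asin(max(-1.0, min(1.0, R[2][0])))
    roll = math.atan2(R[2][1], R[2][2])
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))

def rotation_zyx(yaw_deg, pitch_deg, roll_deg):
    """Helper for the demo only: build Rz(yaw) * Ry(pitch) * Rx(roll)."""
    y, p, r = (math.radians(a) for a in (yaw_deg, pitch_deg, roll_deg))
    cy, sy, cp, sp = math.cos(y), math.sin(y), math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    return [[cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr]]

def rotate(R, v):
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))

# Demo: rotate two marker directions by a known orientation, then recover it.
true_R = rotation_zyx(30.0, 10.0, -5.0)
ref_a, ref_b = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
angles = yaw_pitch_roll(triad(ref_a, ref_b,
                              rotate(true_R, ref_a), rotate(true_R, ref_b)))
```

Because every frame yields its own absolute orientation, an estimate like this does not accumulate error from frame to frame the way an optical-flow integration would.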

![]()

Yaw angle of VR content and BUDDY-3 head over a forty-second period. The robot head rotates back and forth about the yaw axis (horizontally). After several of these rotations, the difference between the robot and content angles starts to increase due to drifting of the head tracking.

From the absolute tracking data, we get a fine-grained picture of the head tracking behavior and content motions over time. In the yaw angle plot above, drifting of the content can be immediately recognized – after the motion sequence is completed, the content yaw angle does not return to the starting angle but ends up a few degrees away from it. Similar robot vs. content angle plots are also acquired for the two other angles, pitch and roll.
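A drift figure can be derived from such robot and content angle traces in several ways. The following is a minimal sketch of two simple ones, not the metric BUDDY-3 reports: the change in the content-minus-robot error between the first and last sample, and the slope of a least-squares line fitted to that error. The function names and the synthetic data are hypothetical.

```python
import math

def angle_errors(robot_yaw, content_yaw):
    """Per-sample difference between content and robot angle, in degrees."""
    return [c - r for r, c in zip(robot_yaw, content_yaw)]

def end_offset(robot_yaw, content_yaw):
    """How much the error changed between the first and the last sample."""
    errors = angle_errors(robot_yaw, content_yaw)
    return errors[-1] - errors[0]

def drift_rate(times, robot_yaw, content_yaw):
    """Slope (degrees per second) of a least-squares line through the error."""
    errors = angle_errors(robot_yaw, content_yaw)
    n = len(times)
    mean_t = sum(times) / n
    mean_e = sum(errors) / n
    num = sum((t - mean_t) * (e - mean_e) for t, e in zip(times, errors))
    den = sum((t - mean_t) ** 2 for t in times)
    return num / den

# Synthetic forty-second run: the robot sweeps +/-30 degrees while the
# content slowly drifts away at 0.05 degrees per second.
times = [i * 0.1 for i in range(401)]
robot = [30.0 * math.sin(t) for t in times]
content = [r + 0.05 * t for r, t in zip(robot, times)]
rate = drift_rate(times, robot, content)
offset = end_offset(robot, content)
```

The fitted slope is less sensitive to noise in individual samples than the end offset, which depends on only two measurements.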

At its worst, drifting is very distracting for the user – the content may move slowly on its own or fail to return to its original position after the user turns their head. Various technical challenges cause drifting. In simple mobile devices with few sensors, the magnetometer is disturbed by surrounding metal. For sophisticated devices with cameras and inside-out tracking, the different, possibly reflective materials in the surrounding space pose a problem. Getting the devices to work consistently in every living room is a real challenge for the manufacturers to tackle. Here our testers help by providing repeatable motions and objective measurement data.

There are many more phenomena that can be seen from the data produced by BUDDY-3: motion-to-photon latency and jitter, among others. We are eager to help our customers find defects and make their devices better. And there is more to come in HMD UX testing. While the OptoFidelity™ BUDDY testers aim at temporal measurements, we also have a solution for spatial measurements: for testing headset displays (near-eye display testing) and optics on the production line, check Display Inspection and calibration.
