Apple Vision Pro Benchmark Test 2: Angular Motion-to-Photon Latency in VR

Motion-to-photon latency of virtual content is one of the most important factors for immersion. When we move, our brains expect a corresponding change in what we see. If the motion and the visual update do not match, we experience a phenomenon called sensory mismatch. Such a mismatch can arise in many different ways and involve several senses, and it can lead to discomfort and nausea.

In this test case, we concentrated on how well angular motion and displayed image motion match each other.

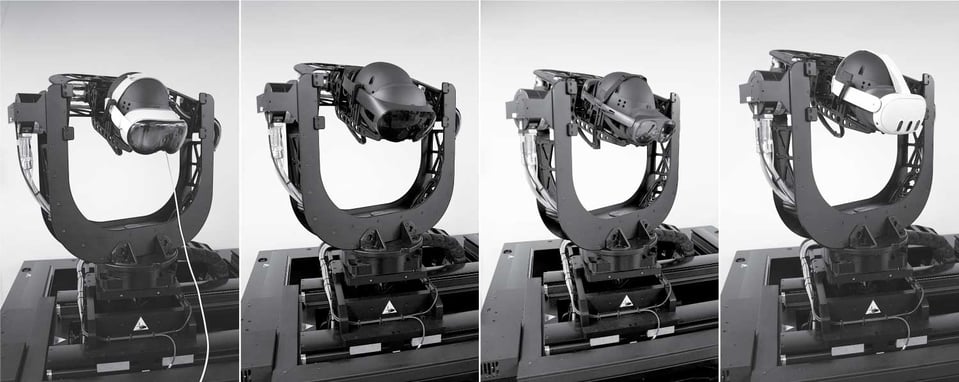

How We Measure Angular Motion-to-Photon Latency with OptoFidelity BUDDY

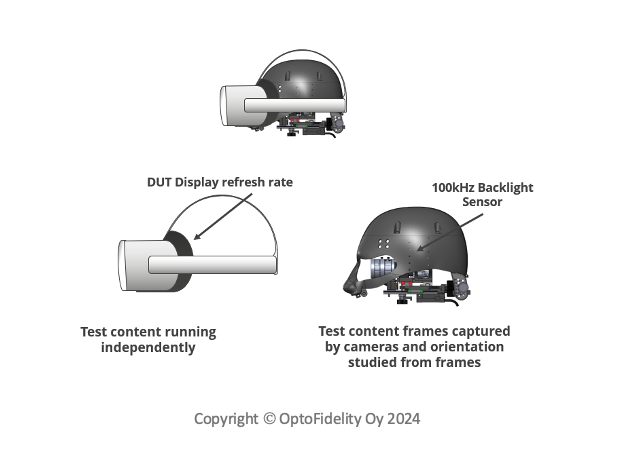

The main quantities in this latency test are time and orientation angle. We want to know which direction the head-mounted display (HMD) is facing in the real world and which direction the virtual content is facing at that same moment in the rendered frame. The test first records these quantities for every frame, providing the data for the latency analysis in post-processing.
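To make the per-frame pairing concrete, here is a minimal Python sketch. It assumes the robot encoder is logged at a higher rate than the camera captures frames, so the HMD angle at each frame's capture time is obtained by linear interpolation of the encoder log. `FrameSample`, `encoder_angle_at`, and `build_samples` are hypothetical names for illustration, not part of OptoFidelity BUDDY.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class FrameSample:
    """One captured headset frame (hypothetical record layout)."""
    t_ms: float               # capture timestamp of the frame
    hmd_angle_deg: float      # real-world HMD angle from the robot encoder
    content_angle_deg: float  # virtual content angle detected in the frame


def encoder_angle_at(t_ms, enc_times, enc_angles):
    """Linearly interpolate the encoder angle at time t_ms.

    enc_times must be sorted ascending; t_ms must lie inside the logged range.
    """
    if not enc_times[0] <= t_ms <= enc_times[-1]:
        raise ValueError("t_ms is outside the encoder log")
    i = bisect_left(enc_times, t_ms)
    if enc_times[i] == t_ms:
        return enc_angles[i]
    # Interpolate between the two encoder samples that bracket t_ms.
    t0, t1 = enc_times[i - 1], enc_times[i]
    a0, a1 = enc_angles[i - 1], enc_angles[i]
    return a0 + (a1 - a0) * (t_ms - t0) / (t1 - t0)


def build_samples(frame_times, content_angles, enc_times, enc_angles):
    """Pair each frame's detected content angle with the HMD angle at that moment."""
    return [
        FrameSample(t, encoder_angle_at(t, enc_times, enc_angles), c)
        for t, c in zip(frame_times, content_angles)
    ]
```

Interpolating the encoder rather than the content keeps the camera frames, which are the slower and less regular signal, as the time base.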

The motion-to-photon test is non-intrusive: we only monitor changes on the headset screen using the machine vision modules. The measurement cameras use a proprietary method to detect the display backlight refresh rate and dynamically adapt to small changes in the frame interval of the image feed. This keeps the cameras in constant sync with the headset display, so that every headset frame is captured with the correct timing.

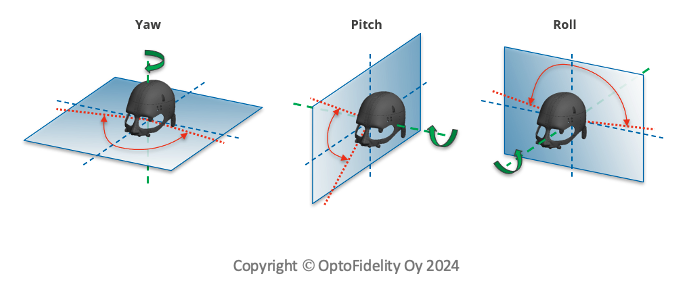

In the angular motion-to-photon test, the compared signals are one-dimensional: the robot axis encoder position and the content position derived from the pixel shift per degree. Each rotation axis (yaw, pitch, and roll) is measured separately so that motion in the other two axes cannot bleed into the pixel shift being measured. Axis bleed is further reduced by camera center-point calibration, which removes any coaxial offset between the virtual camera and the measurement camera.
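The conversion from pixel shift to an angle can be sketched as below. This assumes a single calibrated pixels-per-degree factor per axis, which is a linear approximation that holds best for small angles near the optical center; the function names and the `pixels_per_degree` parameter are hypothetical.

```python
def pixel_shift_to_degrees(pixel_shift, pixels_per_degree):
    """Convert a content pixel shift on one axis to an angle in degrees.

    Assumes a constant, calibrated pixels-per-degree scale (linear approximation).
    """
    if pixels_per_degree <= 0:
        raise ValueError("pixels_per_degree must be positive")
    return pixel_shift / pixels_per_degree


def content_angle_trace(pixel_shifts, pixels_per_degree, reference_angle_deg=0.0):
    """Turn a per-frame pixel-shift trace into a content angle trace for one axis."""
    return [
        reference_angle_deg + pixel_shift_to_degrees(p, pixels_per_degree)
        for p in pixel_shifts
    ]
```

Once both traces are in degrees, the encoder signal and the content signal can be compared directly on the same scale.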

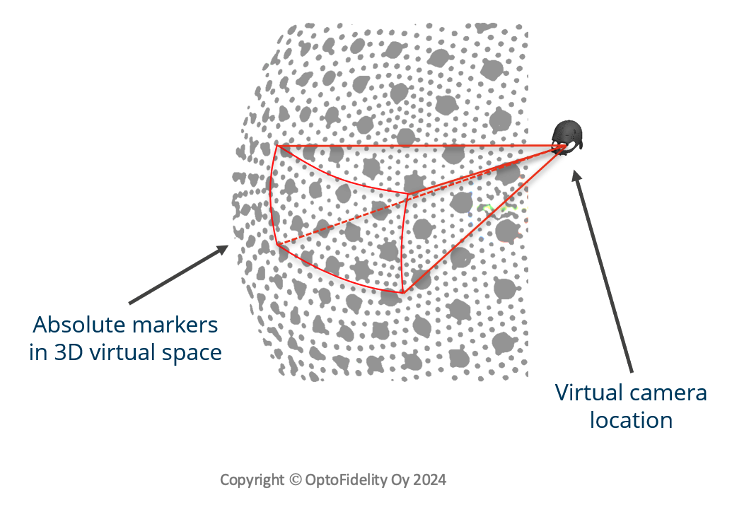

Unlike the see-through measurement, this test feeds no external signals into the headset, shows no targets, and uses no flashing lights. Instead, a native or OpenXR application renders absolute markers in 3D virtual space. The absolute marker constellation is rendered at infinity, which removes any possible XYZ drift and gives a purely angular response of headset performance. Each combination of three markers is unique, so it identifies the absolute direction of the virtual world in every detected and captured frame.
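One way to picture how a unique marker triple yields an absolute direction is a lookup table keyed by the set of marker IDs. In this sketch the catalogue contents, the (yaw, pitch) direction format, and the helper names are hypothetical, and the order of markers within a triple is ignored, which the real application may not do.

```python
from itertools import combinations


def build_catalogue(entries):
    """Map each unique marker-ID triple to its absolute (yaw, pitch) direction in degrees.

    `entries` is an iterable of ((id_a, id_b, id_c), (yaw_deg, pitch_deg)) pairs.
    """
    catalogue = {}
    for ids, direction in entries:
        key = frozenset(ids)
        if len(key) != 3:
            raise ValueError(f"expected three distinct marker IDs, got {ids!r}")
        if key in catalogue:
            raise ValueError(f"marker triple {sorted(key)} is not unique")
        catalogue[key] = direction
    return catalogue


def lookup_direction(detected_ids, catalogue):
    """Return the direction of the first known triple among the detected markers, or None."""
    for triple in combinations(sorted(set(detected_ids)), 3):
        direction = catalogue.get(frozenset(triple))
        if direction is not None:
            return direction
    return None
```

Rejecting duplicate triples when the catalogue is built enforces the uniqueness property the measurement relies on: any three recognized markers point to exactly one direction.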

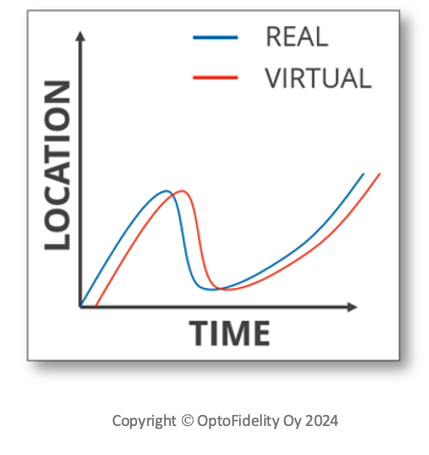

Each rotation axis is moved back and forth in a sinusoidal sequence. For each axis, the two one-dimensional sinusoidal signals (encoder position and content position) are shifted in time relative to each other to find the delay between them, which gives the angular motion-to-photon latency of that axis.
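The time-sliding step can be sketched as a brute-force search over candidate delays: for each shift, compare the encoder trace with the delayed content trace and keep the shift with the smallest mean squared difference. This assumes both traces are already expressed in degrees and resampled onto the same uniform time grid with spacing `dt_ms`; the function name and parameters are hypothetical, and this is not necessarily the method BUDDY uses in post-processing.

```python
def estimate_latency_ms(encoder_deg, content_deg, dt_ms, max_lag_samples):
    """Estimate how far content_deg lags encoder_deg, in milliseconds.

    Both traces must be sampled on the same uniform grid with spacing dt_ms.
    A positive result means the displayed content trails the real motion.
    """
    n = min(len(encoder_deg), len(content_deg))
    if max_lag_samples >= n:
        raise ValueError("max_lag_samples must be smaller than the trace length")

    best_lag, best_err = 0, float("inf")
    for lag in range(max_lag_samples + 1):
        # Compare encoder[i] with content[i + lag]: if the content is delayed by
        # `lag` samples, these pairs line up and the error approaches zero.
        overlap = n - lag
        err = sum(
            (encoder_deg[i] - content_deg[i + lag]) ** 2 for i in range(overlap)
        ) / overlap
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag * dt_ms
```

The resolution of this sketch is one sample (`dt_ms`); a finer estimate would need interpolation around the best shift, for example a parabolic fit of the error curve.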

The Benchmark Results

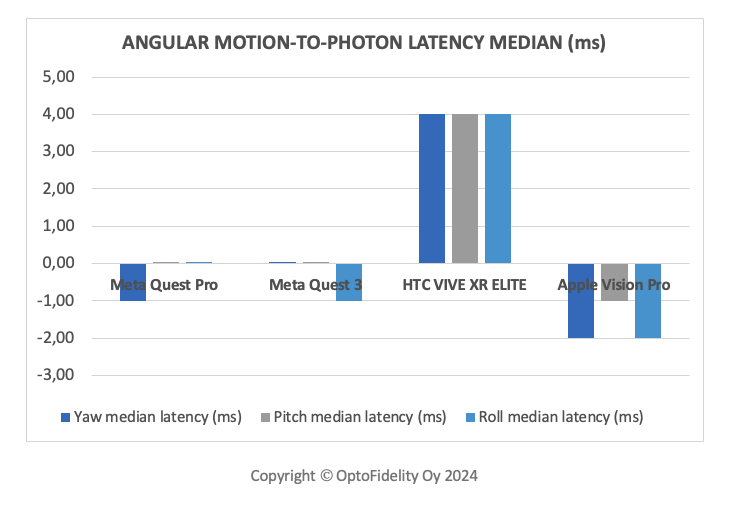

Angular motion-to-photon latency medians (in milliseconds) can be seen below.

All the test subjects perform relatively well in terms of motion-to-photon (MTP) latency. HTC's 4 ms latency is slightly higher than expected, but it is unlikely to be noticeable to the human eye. Meta has consistently performed very well in MTP latency tests since the release of Quest 2, so it comes as no surprise that Quest Pro and Quest 3 excel in this test, given Meta's extensive experience in fine-tuning its time-warp algorithms. Apple Vision Pro also performs extremely well, possibly with slightly aggressive prediction and/or time warp. However, differences of this magnitude cannot be perceived by the human eye.

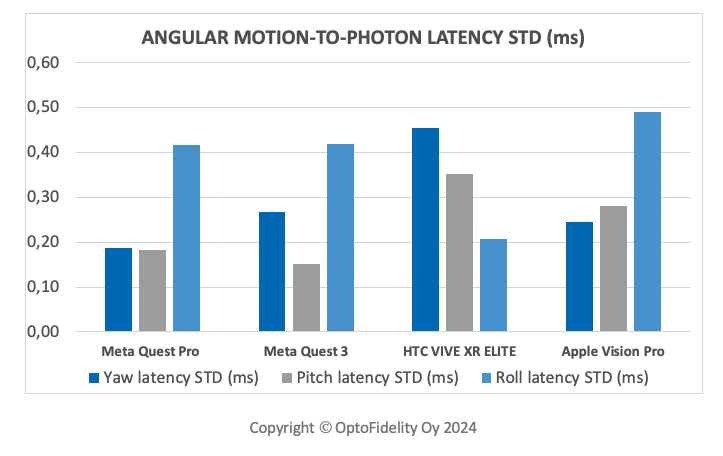

While the previous chart shows the continuous, constant median latency, the standard deviation also captures fast, short-term local latency changes. See picture below.

From the graph, we can see that the differences between the axes of a single HMD can be larger than the differences between devices.
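Given latency estimates from many short time windows per axis (for example, from repeating a time-shift search on successive windows of the trace), the median and standard deviation could be summarized as follows. The input layout, the function name, and the choice of the sample standard deviation are assumptions for illustration; the sketch uses only Python's standard `statistics` module.

```python
import statistics
from typing import Dict, List, Tuple


def summarize_latency(windows_ms: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Return (median, sample standard deviation) of per-window latency for each axis.

    `windows_ms` maps an axis name such as "yaw" to its per-window latency estimates.
    """
    summary = {}
    for axis, values in windows_ms.items():
        if len(values) < 2:
            raise ValueError(f"axis {axis!r} needs at least two latency windows")
        # The median describes the steady latency; the standard deviation
        # reflects how much the latency fluctuates between short windows.
        summary[axis] = (statistics.median(values), statistics.stdev(values))
    return summary
```

Keeping the two statistics per axis, rather than pooling all axes, preserves the per-axis differences that the graph highlights.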

It can be concluded that Meta's Quest Pro and Quest 3 perform best in the group, with very similar results. However, Apple Vision Pro is definitely not far behind and might reach Meta's level by fine-tuning its prediction and time-warp algorithms.
